Learn how to orchestrate a multi-service blockchain validator stack using Docker Compose, including Sisu, Dheart, Deyes, Ganache, and MySQL, with health checks, logging, and multi-validator setups.

Running a blockchain validator requires orchestrating multiple interconnected services that must start in the correct order, communicate reliably, and maintain persistent state across restarts. Docker Compose provides an elegant solution for managing this complexity, allowing teams to define their entire validator infrastructure as code.

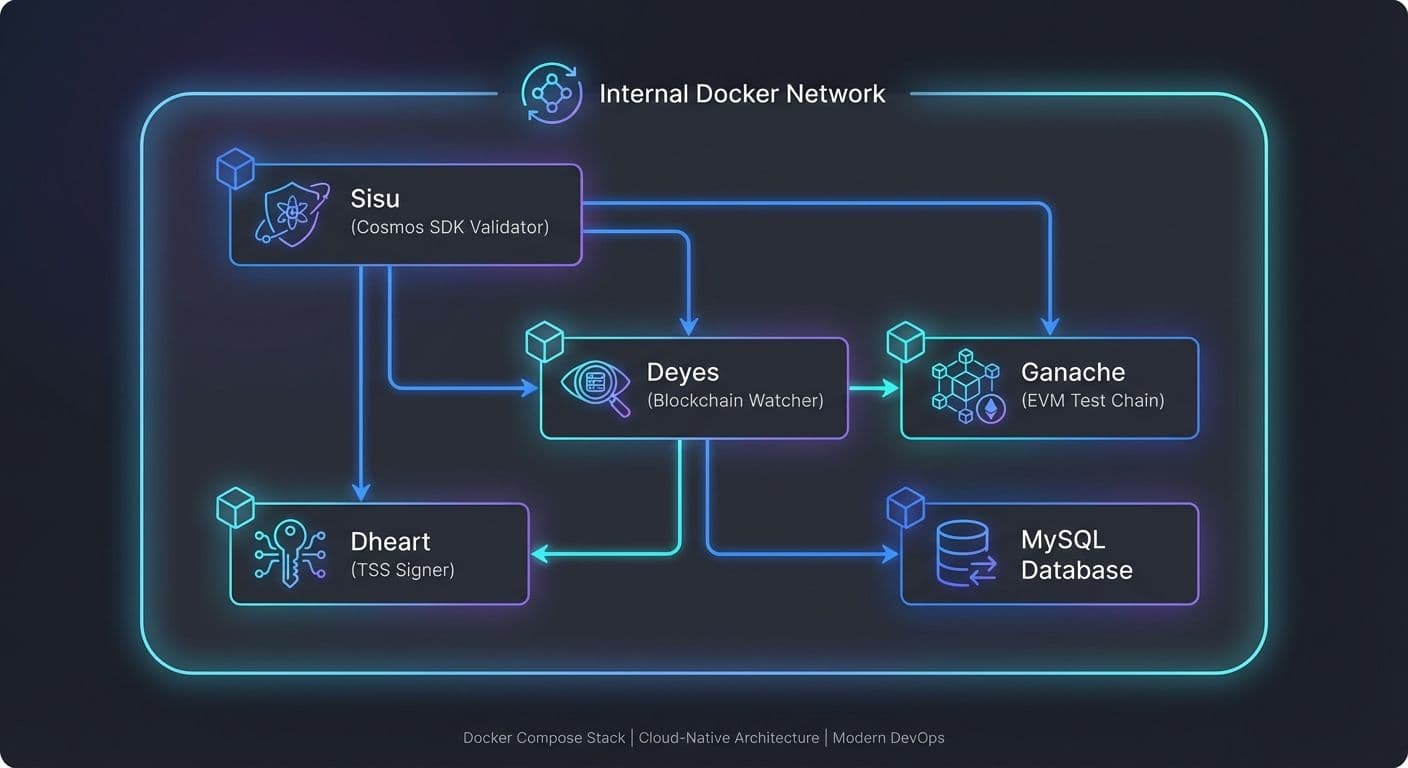

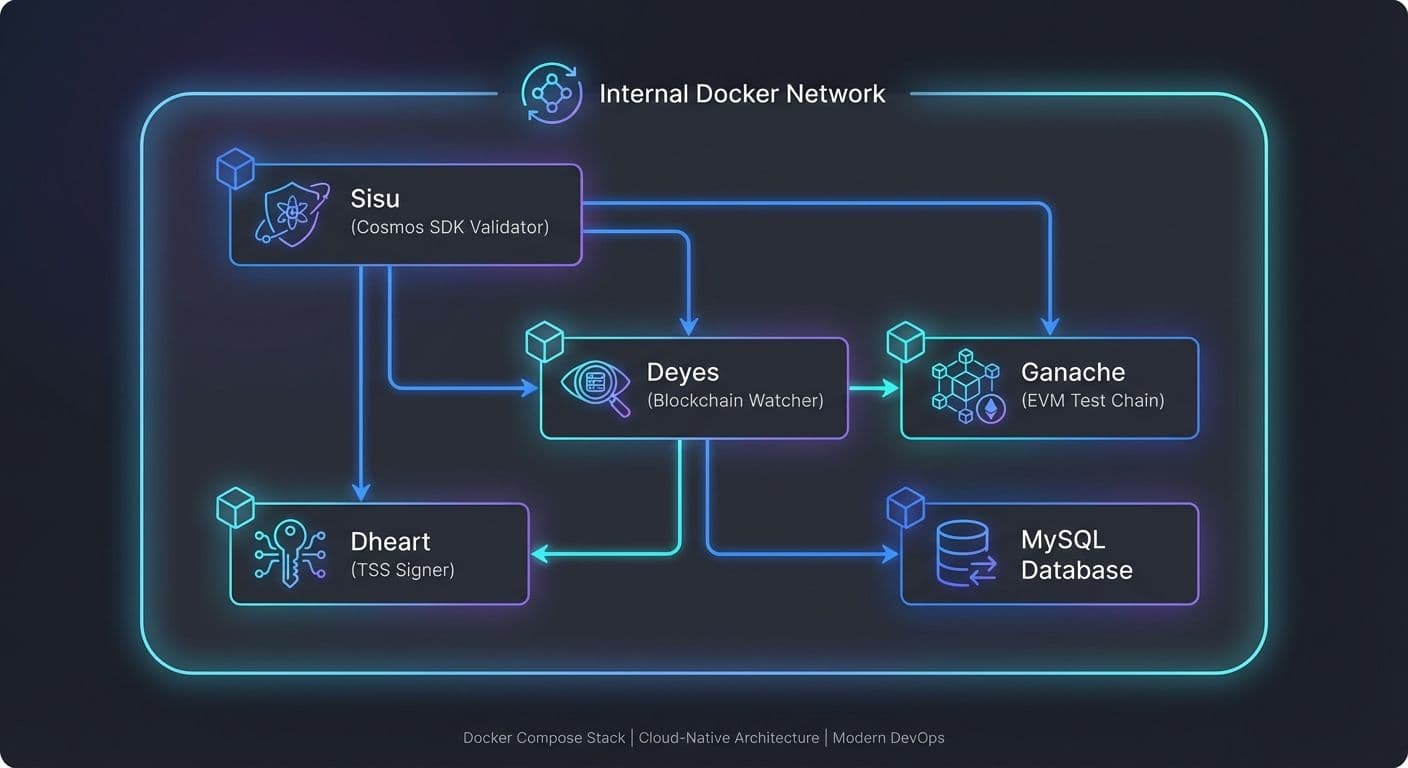

This guide explores practical patterns for building production-ready validator stacks, drawing from real-world experience running Sisu Network validators with their ecosystem of supporting services including Dheart (TSS signer), Deyes (blockchain watcher), Ganache (local EVM chains), and MySQL for state persistence.

Blockchain validators are rarely single-process applications. A typical validator node depends on several tightly coupled components: the core consensus engine, cryptographic signing services, event watchers monitoring external chains, database backends, and often local test networks for development. Each service has its own startup requirements, health indicators, and failure modes.

Without proper orchestration, you face race conditions where services start before their dependencies are ready, data corruption from improper shutdown sequences, and debugging nightmares when logs are scattered across multiple terminals. Docker Compose solves these problems by providing declarative service definitions with explicit dependency graphs.

A well-designed validator stack follows a layered architecture. At the foundation sits your database layer, typically MySQL or PostgreSQL, providing persistent storage for validator state, transaction history, and configuration. Above this, blockchain watchers monitor external networks for relevant events. The signing layer handles threshold signature scheme (TSS) operations. Finally, the validator core coordinates consensus and block production.

For the Sisu Network stack, this translates to: MySQL as the persistence layer, Ganache instances simulating EVM chains for testing, Deyes watching blockchain events, Dheart managing distributed key generation and signing, and Sisu running the Cosmos SDK-based validator logic.

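The most critical aspect of multi-service orchestration is managing startup order. Services must wait for their dependencies to be truly ready, not just running. On its own, Docker Compose's depends_on directive only waits for a dependency's container to start. Combined with a healthcheck on the dependency and the service_healthy condition, it holds a service back until everything it depends on reports healthy.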

For a blockchain validator stack, the typical startup sequence is: Database first (MySQL must accept connections), then blockchain simulators (Ganache instances need to be mining), followed by watchers (Deyes connects to both database and chains), then signing services (Dheart requires database and may need Deyes), and finally the validator core (Sisu depends on all previous services).
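The sequence above can be sketched with the long form of `depends_on`. This is an illustrative fragment, not the Sisu project's actual compose file: the `example/...` image names are placeholders, and the healthchecks are left out for brevity. Every service referenced with `condition: service_healthy` must define a healthcheck (or inherit one from its image), otherwise Compose cannot satisfy the condition.

```yaml
services:
  mysql:
    image: mysql:8.0
    # healthcheck required here (omitted in this sketch)

  ganache:
    image: trufflesuite/ganache
    # healthcheck required here (omitted in this sketch)

  deyes:
    image: example/deyes:latest      # placeholder image name
    # healthcheck required here, because dheart waits on it
    depends_on:
      mysql:
        condition: service_healthy
      ganache:
        condition: service_healthy

  dheart:
    image: example/dheart:latest     # placeholder image name
    # healthcheck required here, because sisu waits on it
    depends_on:
      mysql:
        condition: service_healthy
      deyes:
        condition: service_healthy

  sisu:
    image: example/sisu:latest       # placeholder image name
    depends_on:
      mysql:
        condition: service_healthy
      deyes:
        condition: service_healthy
      dheart:
        condition: service_healthy
```

Listing every dependency explicitly on `sisu`, rather than relying on the transitive chain, keeps the graph readable and still works if an intermediate service is later removed.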

Each service should define a healthcheck that verifies actual readiness, not just process existence. For MySQL, this means checking that the server accepts queries. For Ganache, verify it responds to RPC calls. For application services, implement dedicated health endpoints.

Effective health checks distinguish between a process that's running and a service that's ready to handle requests. MySQL might be accepting TCP connections before it's finished initializing databases. A proper health check runs an actual query to verify the database is operational.
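As a hedged example, a MySQL healthcheck that runs a real query might look like the fragment below. It assumes the official `mysql` image and a root password supplied through the environment; the timing values are illustrative starting points, not tuned recommendations. Connecting to `127.0.0.1` forces a TCP connection instead of the local socket, which is intended to keep the check from passing while the image is still running its socket-only initialization.

```yaml
services:
  mysql:
    image: mysql:8.0
    environment:
      MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
    healthcheck:
      test:
        - CMD-SHELL
        # "$$" is Compose's escape for a literal "$", so the variable is
        # expanded by the shell inside the container, not by Compose.
        - mysql -h 127.0.0.1 -uroot -p"$$MYSQL_ROOT_PASSWORD" -e 'SELECT 1'
      interval: 10s      # time between checks
      timeout: 5s        # a check taking longer counts as a failure
      retries: 10        # consecutive failures before "unhealthy"
      start_period: 30s  # failures during this window are not counted
```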

For blockchain nodes like Ganache, health checks should verify that the RPC endpoint answers a basic query such as eth_blockNumber. For a local simulator, a valid response confirms the node is up and serving requests. Timing parameters matter too: set intervals short enough to detect failures quickly, but not so aggressive that they overwhelm recovering services.
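A sketch of such an RPC check follows. It assumes the container image ships a shell plus a `wget` that supports `--header` and `--post-data`, and that Ganache listens on its default port 8545; if your image lacks `wget`, substitute whatever HTTP client it provides.

```yaml
services:
  ganache:
    image: trufflesuite/ganache
    healthcheck:
      test:
        - CMD-SHELL
        # Send an eth_blockNumber JSON-RPC request and require a "result"
        # field in the reply; an error response or no response fails the check.
        - >-
          wget -q -O - --header 'Content-Type: application/json'
          --post-data '{"jsonrpc":"2.0","method":"eth_blockNumber","params":[],"id":1}'
          http://127.0.0.1:8545 | grep -q '"result"'
      interval: 5s
      timeout: 3s
      retries: 12
      start_period: 10s
```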

Blockchain validators maintain critical state that must survive container restarts: private keys, validator state, transaction history, and TSS key shares. Losing this data can result in slashing penalties or permanent loss of staked assets.

Docker named volumes provide the persistence layer. Define separate volumes for each service's data: one for MySQL databases, another for validator home directories containing keys, and dedicated volumes for any service maintaining local state. Named volumes persist across container rebuilds and can be backed up independently.
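For illustration, one named volume per stateful service could be declared as below. `/var/lib/mysql` is the data directory of the official MySQL image; the `example/...` images and the `/data` mount paths for Sisu and Dheart are hypothetical and need to match wherever your builds actually keep keys and state.

```yaml
services:
  mysql:
    image: mysql:8.0
    volumes:
      - mysql-data:/var/lib/mysql

  sisu:
    image: example/sisu:latest      # placeholder image name
    volumes:
      - sisu-home:/data             # hypothetical validator home directory

  dheart:
    image: example/dheart:latest    # placeholder image name
    volumes:
      - dheart-data:/data           # hypothetical location of TSS key shares

# Top-level declarations make these named volumes; they outlive
# `docker compose down` unless it is run with the -v flag.
volumes:
  mysql-data:
  sisu-home:
  dheart-data:
```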

Avoid keeping sensitive keys in bind mounts from the host filesystem in production. Named volumes are managed by Docker, stay out of project directories, and can be placed on encrypted storage, for example an encrypted disk backing Docker's data directory. For development, bind mounts offer convenience but require careful gitignore configuration to prevent accidental key commits.

A dedicated Docker network isolates validator services from other containers and provides automatic DNS resolution. Services reach each other by service name rather than IP address, which simplifies configuration and keeps working when containers are recreated with new addresses.

Minimize published ports to reduce attack surface. Only the services requiring external access need port mappings: perhaps the validator's P2P port for network participation and an RPC port for monitoring. Internal services like the database should never publish ports to the host.
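A minimal sketch of this layout is shown below. The network name is arbitrary, the `example/sisu` image is a placeholder, and the port numbers are the usual Tendermint/CometBFT defaults, assumed here because Sisu is built on the Cosmos SDK; check your validator's actual configuration.

```yaml
services:
  mysql:
    image: mysql:8.0
    networks:
      - validator-net
    # No "ports:" entry: reachable as "mysql:3306" from this network only.

  sisu:
    image: example/sisu:latest       # placeholder image name
    networks:
      - validator-net
    ports:
      - "26656:26656"                # P2P, open to peers (assumed default)
      - "127.0.0.1:26657:26657"      # RPC, bound to loopback on the host

networks:
  validator-net:
    driver: bridge
```

Binding the RPC port to `127.0.0.1` keeps it usable for local monitoring agents without exposing it on the host's public interfaces.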

Bridge networks work well for single-host deployments. For multi-node validator clusters, consider overlay networks that span multiple Docker hosts, though this adds complexity better handled by Kubernetes for production deployments.

Effective debugging requires centralized, searchable logs. Configure all services to log to stdout/stderr, letting Docker's logging driver handle collection. Use structured logging formats like JSON to enable log aggregation tools.

Set appropriate log rotation to prevent disk exhaustion. Blockchain validators can generate substantial log volume during normal operation, and even more during network incidents. Configure max-size and max-file options to bound disk usage while retaining enough history for debugging.
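One way to apply the same rotation policy to every service is a YAML anchor on an extension field, as in this sketch. The size and file-count values are illustrative, and the `example/...` image names are placeholders.

```yaml
# Extension field ("x-" prefix) holding the shared logging settings.
x-logging: &default-logging
  driver: json-file
  options:
    max-size: "50m"   # rotate once a log file reaches 50 MB
    max-file: "5"     # keep at most 5 files per container

services:
  sisu:
    image: example/sisu:latest     # placeholder image name
    logging: *default-logging

  dheart:
    image: example/dheart:latest   # placeholder image name
    logging: *default-logging
```

With these values each container's logs are bounded at roughly 250 MB on disk.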

For production deployments, ship logs to external aggregation services. This provides persistence beyond container lifecycle, enables cross-service correlation, and allows alerting on error patterns. Tools like Loki, Elasticsearch, or cloud logging services integrate well with Docker's logging architecture.

Separate configuration from code using environment variables. Docker Compose supports .env files for local development and environment variable substitution in compose files. This enables the same compose file to work across development, staging, and production environments.
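As a small illustration, the fragment below reads values from a `.env` file next to the compose file. The variable names, the `DB_HOST`/`DB_NAME` settings, and the image name are hypothetical; only the substitution syntax itself is standard Compose.

```yaml
# Contents of .env (gitignored), shown here as comments:
#   SISU_IMAGE_TAG=v0.1.0
#   MYSQL_DATABASE=sisu

services:
  sisu:
    # Falls back to "latest" when SISU_IMAGE_TAG is unset or empty.
    image: example/sisu:${SISU_IMAGE_TAG:-latest}
    environment:
      DB_HOST: mysql
      # Aborts with this message when MYSQL_DATABASE is unset or empty.
      DB_NAME: ${MYSQL_DATABASE:?set MYSQL_DATABASE in .env}
```

Running `docker compose config` prints the file with all substitutions resolved, which is a quick way to confirm each environment gets the values you expect.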

Sensitive values like database passwords and API keys should never appear in compose files committed to version control. Use Docker secrets for production or external secret management systems. For development, .env files work but must be gitignored.
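A hedged example with file-based Compose secrets: the official MySQL image can read its root password from a file via `MYSQL_ROOT_PASSWORD_FILE`, so the value never appears in the compose file or in the container's environment. The secret name and file path are illustrative.

```yaml
services:
  mysql:
    image: mysql:8.0
    environment:
      # The image reads the password from this file at startup.
      MYSQL_ROOT_PASSWORD_FILE: /run/secrets/mysql_root_password
    secrets:
      - mysql_root_password

secrets:
  mysql_root_password:
    # Keep this file out of version control.
    file: ./secrets/mysql_root_password.txt
```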

Docker Compose integrates naturally into CI/CD pipelines. Use compose files for integration testing, spinning up complete validator stacks to verify cross-service interactions. Running the same compose configuration in CI that developers run locally keeps environments consistent on the way to deployment.

Google Cloud Build, GitHub Actions, and GitLab CI all support Docker Compose. Build images, run integration tests against the full stack, and push verified images to registries. For blockchain validators, this might include automated tests that verify block production, transaction processing, and TSS signing ceremonies.
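As one possible shape for such a pipeline, here is a GitHub Actions workflow sketch. The test script path is hypothetical, and the workflow assumes the compose file defines healthchecks so that `--wait` has something to wait for, and that any required secrets are provided to the job.

```yaml
name: integration
on: [push]

jobs:
  stack-test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Build and start the stack
        # --wait blocks until services are running and healthy, or fails.
        run: docker compose up -d --build --wait

      - name: Run integration tests
        run: ./scripts/integration-test.sh   # hypothetical test script

      - name: Dump logs on failure
        if: failure()
        run: docker compose logs --no-color

      - name: Tear down
        if: always()
        # -v also removes the named volumes created for this run.
        run: docker compose down -v
```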

While Docker Compose excels for development and simple deployments, production validator infrastructure often outgrows its capabilities. Consider Kubernetes for multi-node deployments requiring automated failover, horizontal scaling, and sophisticated networking policies.

For single-node production deployments, Docker Compose remains viable with additional hardening: use restart policies to recover from crashes, implement external monitoring and alerting, establish backup procedures for volumes, and document recovery procedures.

Security hardening includes running containers as non-root users, using read-only filesystems where possible, limiting container capabilities, and implementing network policies. Validator keys require special attention: consider hardware security modules or dedicated key management services for production staking operations.
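The restart and hardening options mentioned above map onto Compose keys roughly as follows. This is a sketch: the image name, UID/GID, and mount path are assumptions, and a real service may need specific capabilities or additional writable paths added back.

```yaml
services:
  sisu:
    image: example/sisu:latest   # placeholder image name
    restart: unless-stopped      # restart after crashes, not after a manual stop
    user: "1000:1000"            # run as a non-root UID:GID (assumed IDs)
    read_only: true              # root filesystem mounted read-only
    tmpfs:
      - /tmp                     # writable scratch space held in memory
    cap_drop:
      - ALL                      # drop every Linux capability
    security_opt:
      - no-new-privileges:true   # block privilege escalation via setuid binaries
    volumes:
      - sisu-home:/data          # hypothetical writable state directory

volumes:
  sisu-home:
```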

Docker Compose provides an effective foundation for blockchain validator infrastructure. Success requires understanding service dependencies, implementing proper health checks, managing persistent state carefully, and planning for observability from the start.

Start with a minimal configuration and add complexity as needed. Test dependency ordering by forcing service restarts. Verify data persists across stack rebuilds. Invest in logging early to ease future debugging. Most importantly, document your stack's architecture and recovery procedures before you need them.
